Suppose that I have the following model:

and

are hidden and

is observed. This model is perhaps not as facile as it seems; the

‘s might be mixture components in a mixture model, i.e., you can imagine hanging other observations off of these variables.

Since these variables are hidden, we usually resort to some sort of approximate inference mechanism. For the sake of argument, assume that we’ve managed to a.) estimate the parameters of the model (

) on some training set and b.) (approximately) compute the posteriors

and

for some test document using the other parts of the model which I’m purposefully glossing over. The question is, how do we make a prediction about

on this test document using this information?

Seems like an easy enough question, and for exposition’s sake I’m going to make this even easier. Assume that . Now, to do the prediction we marginalize out the hidden variables:

By linearity, we can rewrite this as

where

And if, as usual, I wanted the log probabilities, those would be easy too,

But suppose I were really nutter-butters and I decided to compute the log predictive likelihood another way, say by computing the expected log probability instead of the log of the expected probability.

It is worth pointing out that Jensen’s inequality tells us that the first term is a lower bound on the latter term, since the logarithm is concave. Because the

is a set of indicators, the log probability has a convenient form, namely

Applying the expectations yields

Jensen’s inequality is basically a statement about a first order Taylor approximation, i.e., $\mathbb{E}[f(x)] \ge f(\mathbb{E}[x])$ when $f$ is convex. When is this approximation good? We might attempt to answer this by looking at the second order term. I will save you the arduous derivation:

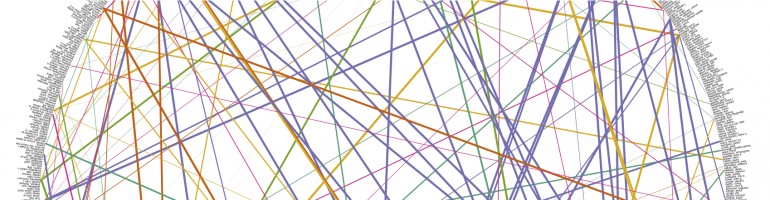
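
For reference, here is the generic second order expansion that this kind of argument rests on. It is a sketch in my own notation (a random variable $x$ with mean $\mu$ and a twice-differentiable $f$), not necessarily the exact form of the equation in the image:

$$
\mathbb{E}[f(x)] \approx f(\mu) + \tfrac{1}{2} f''(\mu)\,\mathrm{Var}(x),
\qquad\text{so}\qquad
\mathbb{E}[f(x)] - f(\mathbb{E}[x]) \approx \tfrac{1}{2} f''(\mu)\,\mathrm{Var}(x).
$$

The first order term vanishes because $\mathbb{E}[x - \mu] = 0$. Taking $f = \log$, for which $f''(\mu) = -1/\mu^2$, the gap between the log of the expectation and the expectation of the log is approximately

$$
\log \mathbb{E}[x] - \mathbb{E}[\log x] \approx \frac{\mathrm{Var}(x)}{2\,\mathbb{E}[x]^2}.
$$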

Now, what better accompaniment to an equation than a plot? I’ve plotted the 2nd order approximation to the difference (solid lines) and the true difference (dashed lines) for different values of while keeping

fixed at

where

is the sigmoid function. The errors are plotted as functions of

I’ve also put a purple line at

that’s the actual log probability you’d see if your predictive model just guessed

with probability 0.5.

Moving the log into the expectation

First thing off the bat: the error is pretty large. For fairly reasonable settings of the parameters, the difference between the two predictive methods actually exceeds the error one would get just by guessing 50/50. For small values of the 2nd order approximation is pretty good, but for large values it greatly underestimates the error. Should I be worried (because, for example, moving the log around the expectation is a key step in EM, variational methods, etc.)?
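
To make the comparison concrete, here is a minimal numerical sketch. It does not use the model from this post. It assumes a toy setup of my own: a single binary indicator `z ~ Bernoulli(q)` and `p(y = 1 | z) = sigmoid(eta * z)`. The names `jensen_gap`, `q`, and `eta` are hypothetical and exist only for illustration. The function returns the exact gap between the log of the expected probability and the expected log probability, along with the generic second order approximation `Var(p) / (2 E[p]^2)`.

```python
import math


def sigmoid(x):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def jensen_gap(q, eta):
    """Return (exact_gap, second_order_gap) for a toy model.

    Assumed toy setup (NOT the model from the post):
      z ~ Bernoulli(q), p(y=1 | z) = sigmoid(eta * z).
    The gap is log E[p(y=1|z)] - E[log p(y=1|z)], which is >= 0 by Jensen.
    """
    p0 = sigmoid(0.0)   # p(y=1 | z=0), always 0.5 in this toy setup
    p1 = sigmoid(eta)   # p(y=1 | z=1)

    # Log of the expectation (marginalize first, then take the log).
    mean_p = (1 - q) * p0 + q * p1
    log_of_mean = math.log(mean_p)

    # Expectation of the log (log moved inside the expectation).
    mean_of_log = (1 - q) * math.log(p0) + q * math.log(p1)

    exact = log_of_mean - mean_of_log

    # Second order Taylor approximation of the gap: Var(p) / (2 E[p]^2).
    var_p = (1 - q) * (p0 - mean_p) ** 2 + q * (p1 - mean_p) ** 2
    second_order = var_p / (2 * mean_p ** 2)
    return exact, second_order


if __name__ == "__main__":
    for eta in (0.1, -1.0, -4.0, -8.0):
        exact, approx = jensen_gap(0.5, eta)
        print(f"eta={eta:5.1f}  exact={exact:.4f}  2nd order={approx:.4f}")
```

In this toy setup the two numbers agree closely when `eta` is near zero. When `eta` is large and negative, the exact gap keeps growing while the second order term stays bounded, which is the same qualitative behavior as in the plot.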

I was motivated to do all this because of a previous post, in which I claimed that a certain approximation of a similar nature was good; the reason there was the low covariance of the random variable. Here, the covariance is large when the expectation is not close to either 0 or 1. Thus the second (and higher) order terms cannot be ignored. There are really two questions I want answered now:

- What happens if the random variable in this model is

rather than

?

- What happens if the random variable in the earlier post is

instead of

?