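I was a little bit sneaky in my previous post, and the reason I say this will become apparent when I change the function being approximated. Let's break it down like this: there are three things we want to compare,

$$(1)\ \log \mathbb{E}[f(x)], \qquad (2)\ \log f(\mathbb{E}[x]),$$

and

$$(3)\ \mathbb{E}[\log f(x)].$$

And just to add a little more notation, let

$$\mu = \mathbb{E}[x]$$

and

$$\Sigma = \mathbb{E}\left[(x - \mu)(x - \mu)^\top\right].$$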
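Before adding any correction terms, it helps to see the three quantities side by side. The sketch below estimates (1) $\log \mathbb{E}[f(x)]$, (2) $\log f(\mathbb{E}[x])$ and (3) $\mathbb{E}[\log f(x)]$ by Monte Carlo. For illustration only, it assumes that x is Dirichlet-distributed and that f is a hypothetical linear function $f(x) = \beta^\top x$. The helper name `three_quantities` and the values of `alpha` and `beta` are made up and are not from the original experiments.

```python
import numpy as np


def three_quantities(f, samples):
    """Monte Carlo estimates of (1) log E[f(x)], (2) log f(E[x]), (3) E[log f(x)].

    samples: (n, K) array of draws of x.
    f: maps an (n, K) array to n positive values.
    """
    fx = f(samples)
    mu = samples.mean(axis=0)              # sample estimate of E[x]
    q1 = np.log(fx.mean())                 # (1) log of the expectation
    q2 = np.log(f(mu[None, :])[0])         # (2) f evaluated once, at the mean
    q3 = np.log(fx).mean()                 # (3) expectation of the log
    return q1, q2, q3


# Hypothetical setup: x ~ Dirichlet(alpha), f linear in x.
rng = np.random.default_rng(0)
alpha = np.array([2.0, 3.0, 5.0])
beta = np.array([0.1, 0.5, 0.9])
samples = rng.dirichlet(alpha, size=100_000)

q1, q2, q3 = three_quantities(lambda x: x @ beta, samples)
print(q1, q2, q3)  # q1 and q2 agree (linearity); q3 is smaller (Jensen)
```

With a nonlinear f, (1) and (2) generally no longer coincide.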
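In the previous post, I focused on a linear f and compared (3) against both (1) and (2), since (1) and (2) are equal for that choice of f. I lamented that the approximation wasn't very good. We can try to ameliorate the situation by adding second-order terms. Let's focus on going from (3) to (2), that is, correcting (2) so that it better approximates (3). (2) is generally the easiest to compute, since you only have to evaluate f at one point, while (3) often appears in the objective function of variational approximations. Expanding log f to second order around μ, the first-order term vanishes in expectation because $\mathbb{E}[x - \mu] = 0$, so the second-order term here is

$$\mathbb{E}[\log f(x)] \approx \log f(\mu) + \frac{1}{2}\operatorname{tr}\left(\nabla^2 \log f(\mu)\,\Sigma\right).$$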
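Here is a minimal sketch of this correction. For illustration only, it again assumes a Dirichlet-distributed x and the hypothetical linear f(x) = βᵀx. For that f, the Hessian of log f at μ is $-\beta\beta^\top/(\beta^\top\mu)^2$, and the Dirichlet mean and covariance have closed forms. The function names and parameter values are illustrative, and a Monte Carlo estimate stands in for the exact value of (3).

```python
import numpy as np


def dirichlet_moments(alpha):
    """Closed-form mean and covariance of x ~ Dirichlet(alpha)."""
    alpha = np.asarray(alpha, dtype=float)
    a0 = alpha.sum()
    mu = alpha / a0
    cov = (np.diag(mu) - np.outer(mu, mu)) / (a0 + 1.0)
    return mu, cov


def expected_log_approx(beta, alpha):
    """First- and second-order approximations of E[log(beta @ x)]."""
    mu, cov = dirichlet_moments(alpha)
    m = beta @ mu
    first = np.log(m)                             # (2): log f(mu)
    hess = -np.outer(beta, beta) / m**2           # Hessian of log(beta @ x) at mu
    second = first + 0.5 * np.trace(hess @ cov)   # add 1/2 tr(H Sigma)
    return first, second


alpha = np.array([2.0, 3.0, 5.0])
beta = np.array([0.1, 0.5, 0.9])
first, second = expected_log_approx(beta, alpha)

# Monte Carlo estimate of (3), used as ground truth.
rng = np.random.default_rng(0)
mc = np.log(rng.dirichlet(alpha, size=200_000) @ beta).mean()
print(abs(first - mc), abs(second - mc))
```

Because log is concave, the trace term is never positive here, so the correction always pulls (2) down toward (3).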
(Note that this second-order term gives you the result I posted a few posts back regarding the second-order approximation of $f_\pi$.) Adding this term leads to the following results (same methodology as before):

Here we do much better than before: except for a few errant cases, the error drops by almost an order of magnitude. We should be happy that we've got this problem licked.

OK, on to the next problem. Suppose we want to compare (1) and (2). For the linear f of the previous post, these are identical by linearity of expectation. But for a nonlinear f, they are not. Again, (2) is easy to compute, but (1) is usually what we want for a predictive probability. The second-order term is simpler here: the log sits on the outermost layer of both (1) and (2), so we can disregard it and expand f itself:
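$$\mathbb{E}[f(x)] \approx f(\mu) + \frac{1}{2}\operatorname{tr}\left(\nabla^2 f(\mu)\,\Sigma\right), \qquad \text{so} \qquad \log \mathbb{E}[f(x)] \approx \log\left(f(\mu) + \frac{1}{2}\operatorname{tr}\left(\nabla^2 f(\mu)\,\Sigma\right)\right).$$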
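The same idea carries over to the predictive case, shown here as a minimal sketch. Purely for illustration, I assume a logistic $f(x) = \operatorname{sigmoid}(\beta^\top x)$ with x Dirichlet-distributed. These are assumptions, not necessarily the setup behind the plots. For this f, $\nabla^2 f(\mu) = \operatorname{sigmoid}''(\beta^\top\mu)\,\beta\beta^\top$, where $\operatorname{sigmoid}'' = s(1-s)(1-2s)$ and $s = \operatorname{sigmoid}(\beta^\top\mu)$. The trace term therefore reduces to $\frac{1}{2}\operatorname{sigmoid}''(\beta^\top\mu)\,\beta^\top\Sigma\beta$, and it goes inside the log.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def dirichlet_moments(alpha):
    """Closed-form mean and covariance of x ~ Dirichlet(alpha)."""
    alpha = np.asarray(alpha, dtype=float)
    a0 = alpha.sum()
    mu = alpha / a0
    cov = (np.diag(mu) - np.outer(mu, mu)) / (a0 + 1.0)
    return mu, cov


def log_predictive_approx(beta, alpha):
    """First- and second-order approximations of log E[sigmoid(beta @ x)]."""
    mu, cov = dirichlet_moments(alpha)
    s = sigmoid(beta @ mu)
    first = np.log(s)                               # (2): log f(mu)
    d2 = s * (1.0 - s) * (1.0 - 2.0 * s)            # sigmoid'' at beta @ mu
    trace_term = 0.5 * d2 * (beta @ cov @ beta)     # 1/2 tr(Hessian @ Sigma)
    second = np.log(s + trace_term)                 # correction sits inside the log
    return first, second


alpha = np.array([2.0, 3.0, 5.0])
beta = np.array([-3.0, 0.0, 3.0])
first, second = log_predictive_approx(beta, alpha)

# Monte Carlo estimate of (1), used as ground truth.
rng = np.random.default_rng(0)
mc = np.log(sigmoid(rng.dirichlet(alpha, size=200_000) @ beta).mean())
print(abs(first - mc), abs(second - mc))
```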
Let's run the same experiments again, this time comparing (1) and (2) for the nonlinear f only.
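The experiment can be sketched as follows. The post doesn't spell out its methodology ("same methodology as before"), so this is an assumed stand-in. It draws random Dirichlet parameters and weights, uses Monte Carlo as the exact value of (1), and records the absolute error of the first- and second-order approximations. The logistic f, the parameter ranges, and the trial and sample counts are all hypothetical choices.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def predictive_errors(rng, n_trials=50, k=5, n_samples=50_000):
    """Absolute errors of 1st/2nd-order approximations to log E[sigmoid(beta @ x)]."""
    err1, err2 = [], []
    for _ in range(n_trials):
        alpha = rng.uniform(1.0, 10.0, size=k)    # hypothetical Dirichlet parameters
        beta = rng.uniform(-3.0, 3.0, size=k)     # hypothetical weights
        a0 = alpha.sum()
        mu = alpha / a0
        cov = (np.diag(mu) - np.outer(mu, mu)) / (a0 + 1.0)

        s = sigmoid(beta @ mu)
        d2 = s * (1.0 - s) * (1.0 - 2.0 * s)      # sigmoid'' at beta @ mu
        first = np.log(s)
        second = np.log(s + 0.5 * d2 * (beta @ cov @ beta))

        x = rng.dirichlet(alpha, size=n_samples)
        exact = np.log(sigmoid(x @ beta).mean())  # Monte Carlo "truth"
        err1.append(abs(first - exact))
        err2.append(abs(second - exact))
    return np.array(err1), np.array(err2)


err1, err2 = predictive_errors(np.random.default_rng(1))
print(err1.mean(), err2.mean())
```

Plotting `err1` and `err2` per trial gives a picture of the same kind as the figures below.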

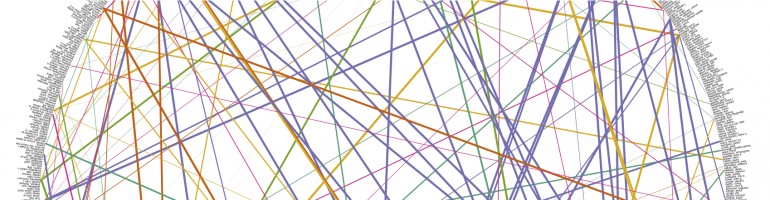

Error using a 1st order predictive approximation

Error using a 2nd order predictive approximation

With the first-order approximation, we get some pretty significant errors. The second-order approximation greatly reduces them, by about an order of magnitude, so it seems like a good idea for this application. Now the question is: will more accuracy here lead to a better-performing model on real data?