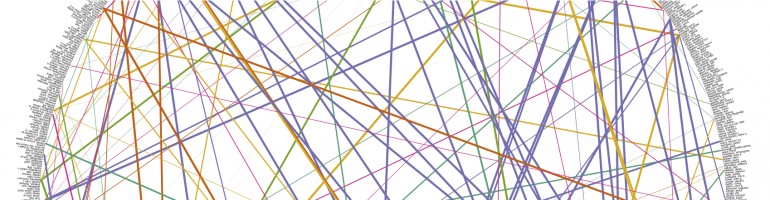

Thanks to all of you who’ve expressed interest in and support for our recent paper Reading Tea Leaves: How Humans Interpret Topic Models, which was co-authored with Jordan Boyd-Graber, Sean Gerrish, Chong Wang, and David Blei. Many people (myself included) either implicitly or explicitly assume that topic models can find meaningful latent spaces with semantically coherent topics. The goal of this paper was to put this assumption to the test by gathering lots of human responses to some tasks we devised. We got some surprising and interesting results: held-out likelihood is often not a good proxy for interpretability. You’ll have to read the paper for the details, but I’ll leave you with a teaser plot below.

Furthermore, Jordan has worked hard prepping some of our data for public release. You can find that stuff here.

I guess you could also try to compute word similarities based on the topics, and compare those to human word associations (using the USF free association data; http://w3.usf.edu/FreeAssociation/) to get an idea of the quality of the topics…

“Rational Analysis as a Link between Human Memory and Information Retrieval” by Steyvers & Griffiths takes that tack.
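A minimal sketch of the comparison suggested in the comment above, written in plain Python with no dependencies. It assumes one common topic-based association measure, P(target | cue) = Σ_z P(target | z) P(z | cue) with P(z | cue) ∝ P(cue | z) P(z), and scores agreement with human data by Spearman rank correlation. Everything named here (`VOCAB`, `PHI`, `PRIOR`, `HUMAN`, `topic_assoc`) is hypothetical: the topic matrix is a toy, and the "human" strengths are invented placeholders, not values from the USF norms.

```python
"""Compare topic-model word associations with human association norms (toy sketch)."""

# HYPOTHETICAL toy model: vocabulary, topic-word matrix PHI[z][w] = P(w | z)
# (each row sums to 1), and a uniform topic prior P(z). Not from any real model.
VOCAB = ["dog", "cat", "bank", "money", "river"]
INDEX = {w: i for i, w in enumerate(VOCAB)}
PHI = [
    [0.40, 0.40, 0.05, 0.05, 0.10],  # topic 0 ("pets")
    [0.02, 0.02, 0.46, 0.46, 0.04],  # topic 1 ("finance")
    [0.05, 0.05, 0.40, 0.00, 0.50],  # topic 2 ("rivers")
]
PRIOR = [1 / 3, 1 / 3, 1 / 3]


def topic_assoc(cue, target, phi=PHI, prior=PRIOR):
    """P(target | cue) = sum_z P(target | z) P(z | cue), with P(z | cue) ∝ P(cue | z) P(z)."""
    c, t = INDEX[cue], INDEX[target]
    weights = [phi[z][c] * prior[z] for z in range(len(phi))]
    total = sum(weights)  # normalizer for the topic posterior given the cue
    return sum(phi[z][t] * weights[z] / total for z in range(len(phi)))


def ranks(xs):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    r = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (va * vb) ** 0.5


def spearman(a, b):
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(ranks(a), ranks(b))


# HYPOTHETICAL placeholder "human" forward-association strengths for
# cue -> target pairs. These are invented numbers, NOT values from the USF norms.
HUMAN = {
    ("dog", "cat"): 0.50,
    ("bank", "money"): 0.40,
    ("bank", "river"): 0.20,
    ("dog", "money"): 0.01,
}

if __name__ == "__main__":
    pairs = list(HUMAN)
    model = [topic_assoc(c, t) for c, t in pairs]
    human = [HUMAN[p] for p in pairs]
    for (c, t), m in zip(pairs, model):
        print(f"P({t} | {c}) = {m:.3f}")
    print(f"Spearman rho = {spearman(model, human):.2f}")
```

For a real comparison you would swap `PHI` for a trained model's topic-word distributions and `HUMAN` for cue-target strengths loaded from the USF data. A rank correlation is used because the model's probabilities and the human response proportions live on different scales; only their orderings are compared. With the placeholder numbers above the script reports rho = 0.80, which says nothing about any actual model.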